Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed a new metric, ‘minimum viewing time’ (MVT), to gauge how difficult an image is to recognize. MVT measures how long a person needs to view an image before identifying it correctly, offering insight into why some images are harder for AI systems to recognize than others.

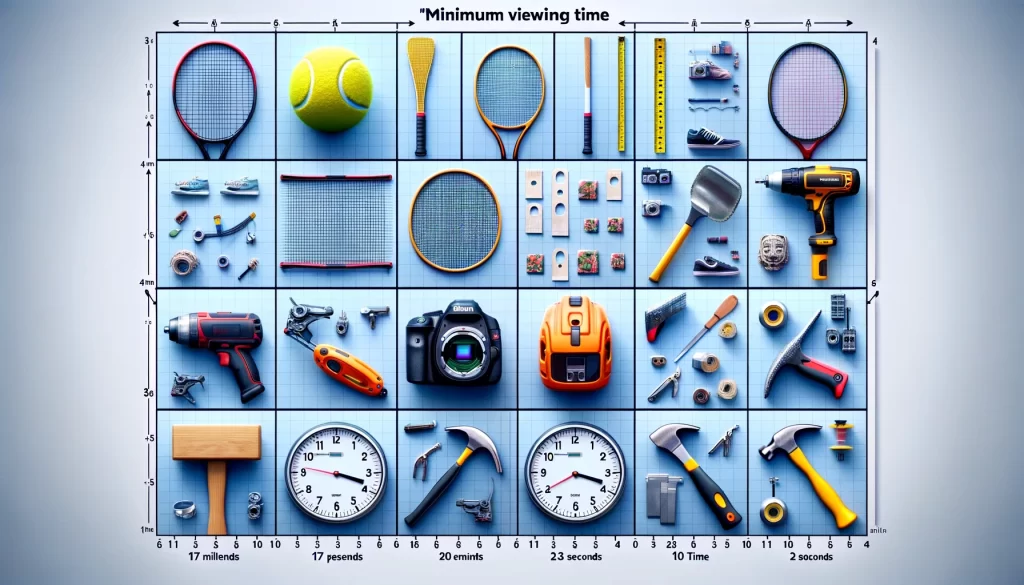

To understand the challenges AI faces in interpreting visual data, a capability crucial in sectors ranging from healthcare to transportation, CSAIL researchers explored a new dimension of image recognition: the time it takes humans to identify an image correctly. This exploration led to the MVT metric. David Mayo, an MIT PhD student, and his team used a subset of the ImageNet and ObjectNet datasets, showing participants images for durations ranging from 17 milliseconds to 10 seconds and asking them to identify the object in each image from a set of options.
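As a rough illustration of how such a per-image score could be derived from the experiment described above, the following Python sketch takes a list of trial records and returns, for each image, the shortest exposure duration at which most participants answered correctly. The majority threshold, the function name `minimum_viewing_time`, the record layout, and the sample data are all assumptions made for this example; the article does not specify how the team aggregates responses.

```python
from collections import defaultdict


def minimum_viewing_time(trials, threshold=0.5):
    """Estimate a per-image minimum viewing time (hypothetical aggregation rule).

    trials: iterable of (image_id, duration_ms, correct) tuples, one per response.
    Returns {image_id: shortest duration whose accuracy exceeds `threshold`},
    or None for images that never exceed it at any tested duration.
    """
    # tallies[image_id][duration_ms] = [number correct, number of responses]
    tallies = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for image_id, duration_ms, correct in trials:
        cell = tallies[image_id][duration_ms]
        cell[0] += int(correct)
        cell[1] += 1

    mvt = {}
    for image_id, by_duration in tallies.items():
        mvt[image_id] = None
        # Scan durations from shortest to longest and stop at the first pass.
        for duration_ms in sorted(by_duration):
            n_correct, n_total = by_duration[duration_ms]
            if n_correct / n_total > threshold:
                mvt[image_id] = duration_ms
                break
    return mvt


# Made-up responses for three images (not data from the study).
example_trials = [
    ("mug", 17, True), ("mug", 17, True), ("mug", 17, False),
    ("hammer", 17, False), ("hammer", 17, False),
    ("hammer", 150, False), ("hammer", 150, True),
    ("hammer", 10000, True), ("hammer", 10000, True),
    ("blur", 17, False), ("blur", 10000, False),
]
example_mvt = minimum_viewing_time(example_trials)
```

In this toy data, the mug is recognized by most viewers at the shortest exposure, the hammer only at the longest, and the blurred image never, so it receives no score.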

The research revealed that current AI models, though performing well on existing datasets, struggled with more complex images requiring longer viewing times. The study showed that while larger AI models improved on simpler images, they made less progress on challenging ones. CLIP models, which integrate language and vision, exhibited more human-like recognition.

“Our research demonstrates that the difficulty of images poses a significant challenge, often overlooked in standard AI evaluations. By using MVT, we can better assess model robustness and their ability to handle complex visual tasks,” explained Mayo.

This new approach contrasts with traditional methods that focus solely on absolute performance. The MVT metric helps assess how AI models respond to varying image complexities, aligning more closely with human visual processing. Mayo and his team believe this metric could revolutionize how AI systems are evaluated and trained, especially in applications like healthcare, where understanding visual complexity is paramount.
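To make the contrast with a single aggregate score concrete, here is a minimal Python sketch that reports a model's accuracy separately for each difficulty level instead of one overall number. It assumes each image already has an MVT value in milliseconds; the function name `accuracy_by_difficulty`, the input dictionaries, and the sample values are hypothetical and do not come from the study.

```python
from collections import defaultdict


def accuracy_by_difficulty(mvt_by_image, model_correct):
    """Group a model's per-image results by MVT and compute accuracy per group.

    mvt_by_image: {image_id: MVT in ms, or None if no MVT is available}
    model_correct: {image_id: True if the model classified the image correctly}
    Returns {mvt_ms: accuracy}, with keys in ascending MVT order.
    """
    totals = defaultdict(lambda: [0, 0])  # mvt -> [number correct, number of images]
    for image_id, correct in model_correct.items():
        mvt = mvt_by_image.get(image_id)
        if mvt is None:
            continue  # skip images without a difficulty score
        totals[mvt][0] += int(correct)
        totals[mvt][1] += 1
    return {mvt: c / n for mvt, (c, n) in sorted(totals.items())}


# Made-up values for illustration only.
sample_mvt = {"a": 17, "b": 17, "c": 10000, "d": 10000, "e": None}
sample_results = {"a": True, "b": True, "c": True, "d": False, "e": True}
per_bin = accuracy_by_difficulty(sample_mvt, sample_results)
```

A model that looks strong overall can still show a sharp drop in the long-MVT groups, which is the kind of gap a single accuracy figure hides.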

The study, co-authored by Mayo, Jesse Cummings, and other CSAIL researchers, is set to be presented at the 2023 Conference on Neural Information Processing Systems (NeurIPS). It marks a significant step towards developing AI models that not only match but potentially surpass human-level performance in object recognition.